What are GitHub Models?

GitHub Models is a workspace built into GitHub for working with large language models (LLMs). It supports prompt design, model comparison, evaluation, and integration, all directly within your repository.

Main capabilities:

- Create prompts using a structured editor

- Run prompts against different LLMs using the same inputs

- Apply built-in evaluators to score responses

- Save configurations as .prompt.yaml files

- Connect via SDKs or the GitHub Models REST API

GitHub Models is currently in public preview for organizations and repositories.

Why use GitHub Models?

GitHub Models enables teams to build and evaluate AI-powered features without leaving their development workflow. It allows for:

- Designing and iterating on prompts in the GitHub UI

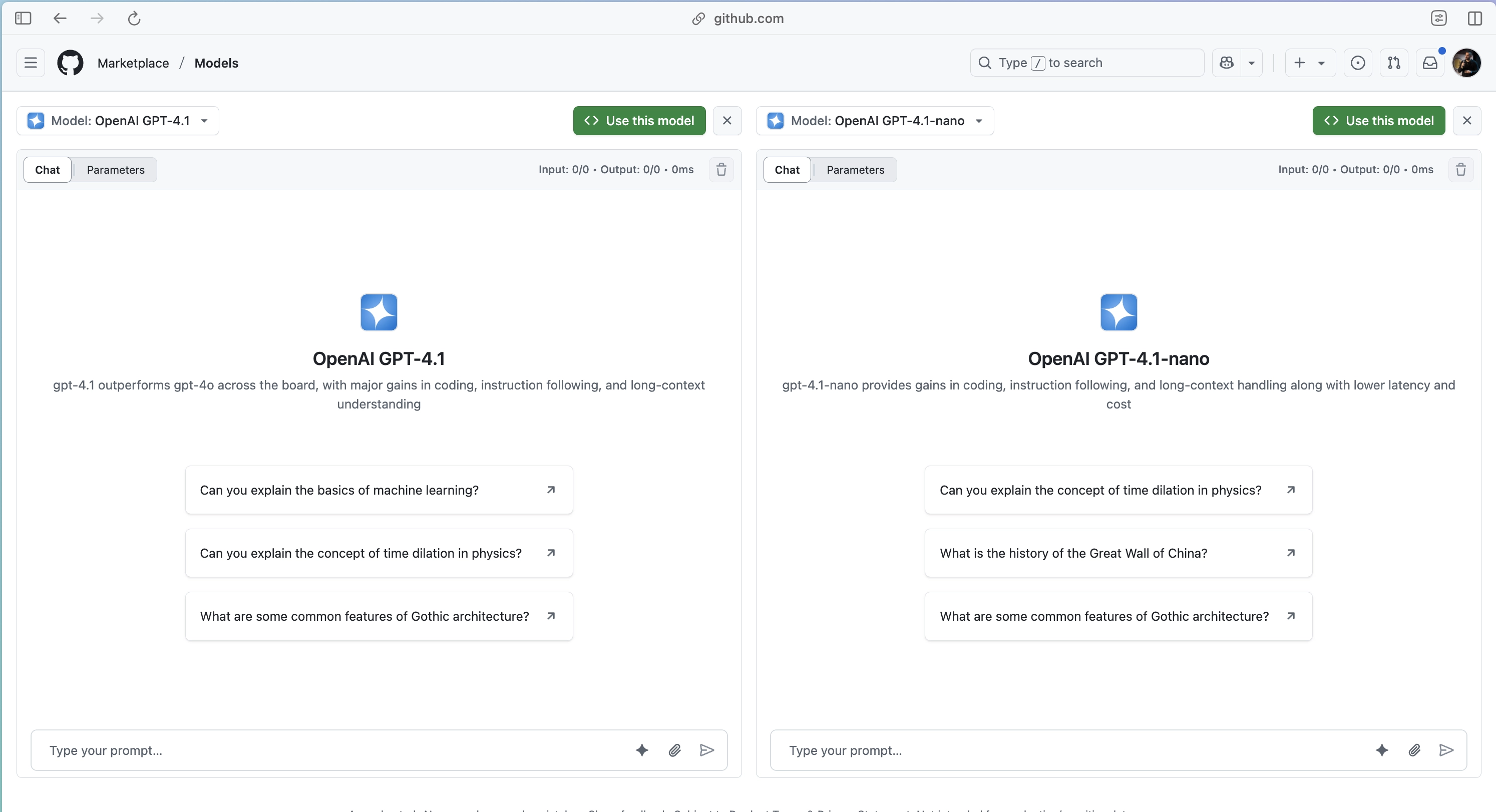

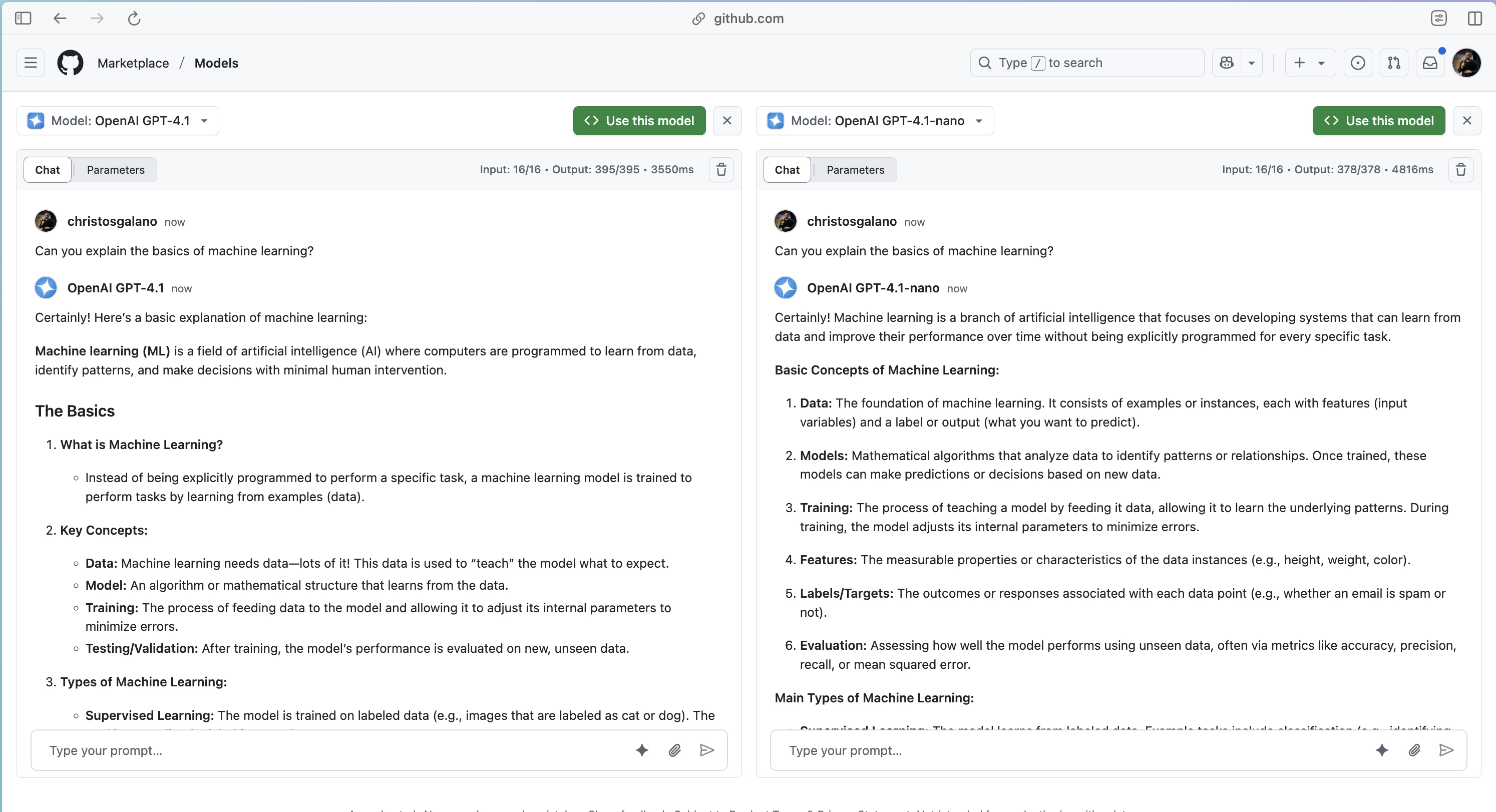

- Running side-by-side model comparisons

- Version-controlling prompts like code

- Sharing, reviewing, and reusing configurations

- Using structured evaluations to guide improvements

Getting Started

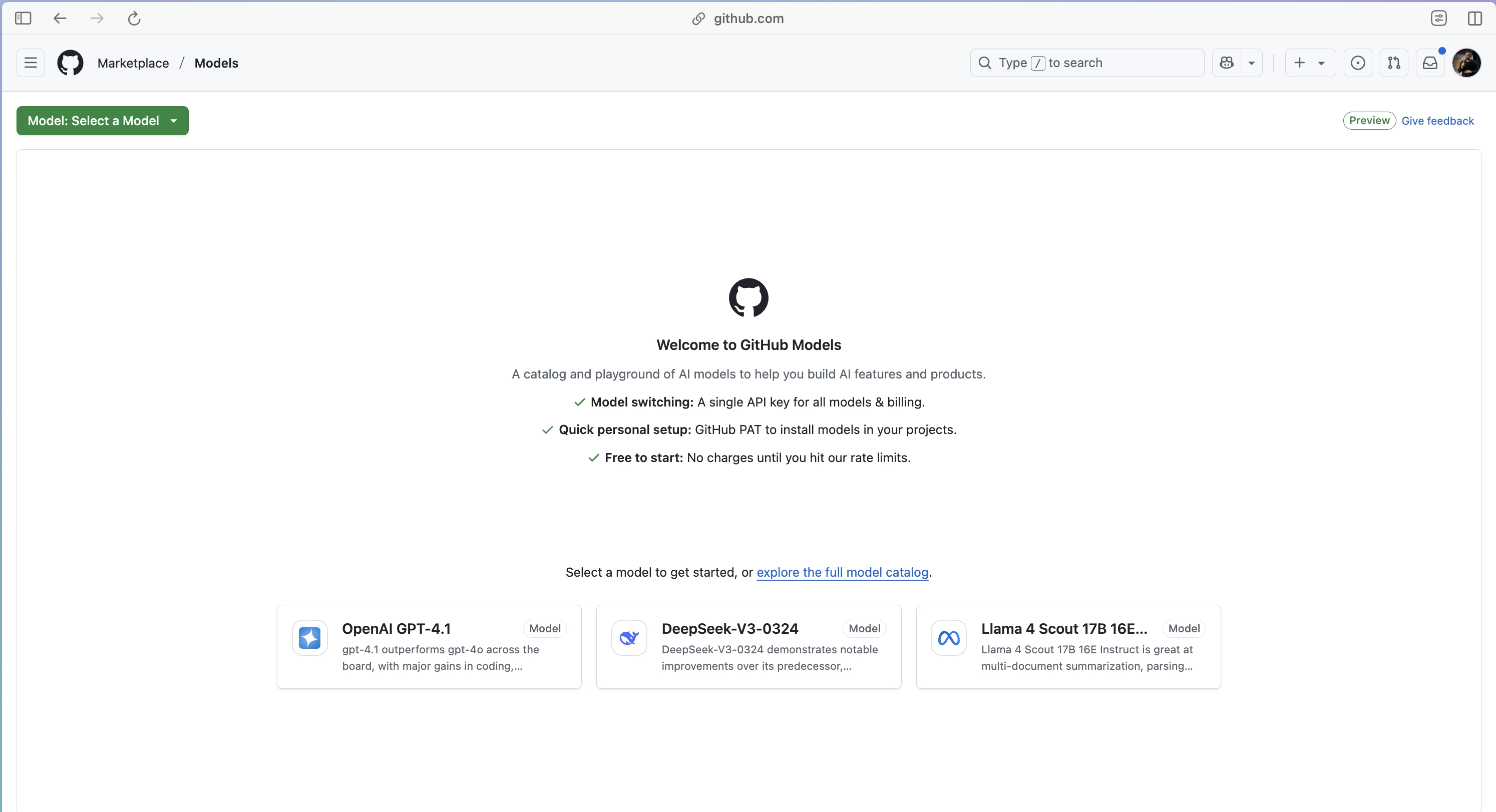

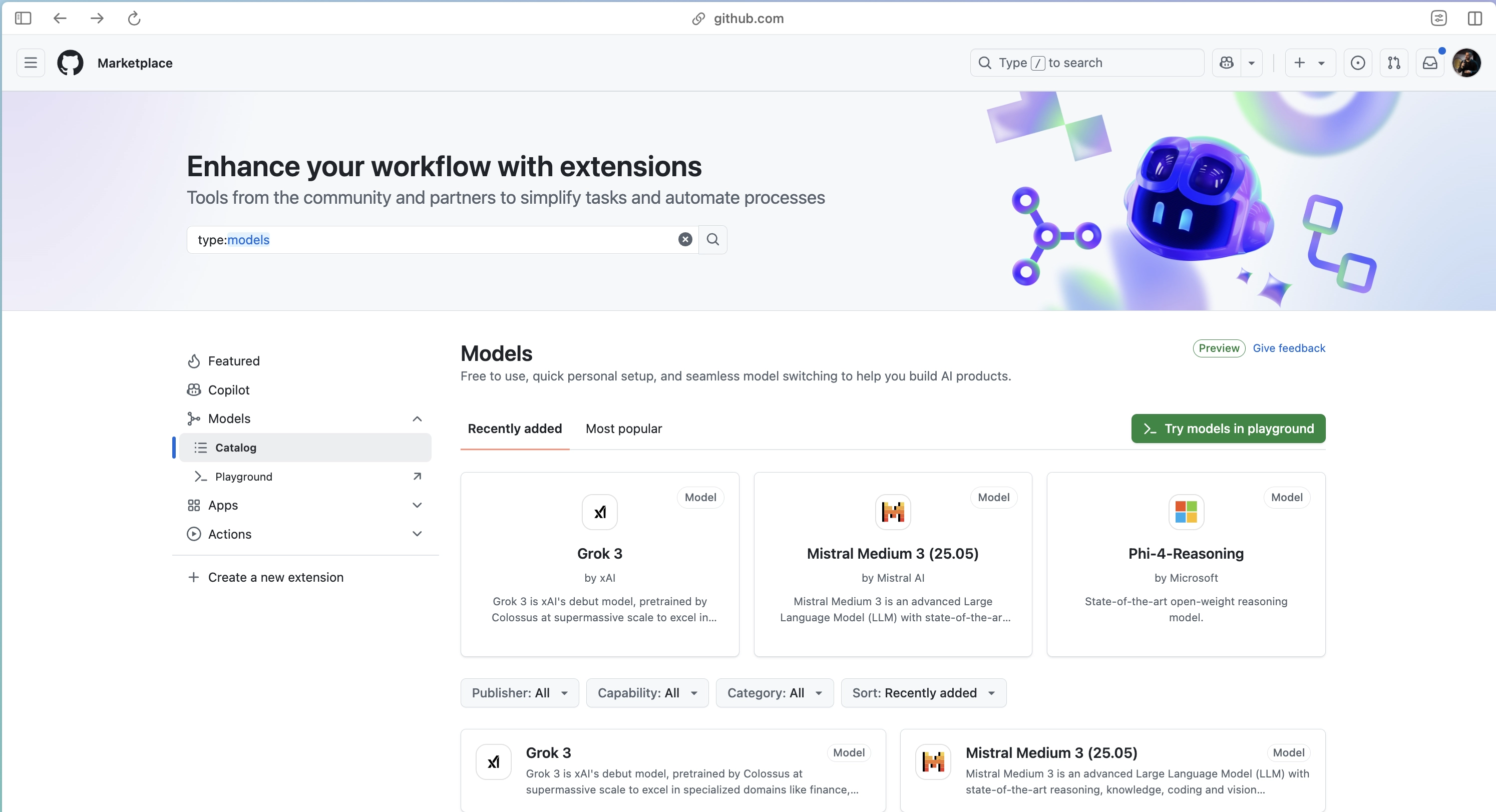

Models Catalog

To start, visit the GitHub Models Marketplace. Use the Select a Model dropdown to:

- View available models

- Check provider and SDK support

- Launch any model in the Playground

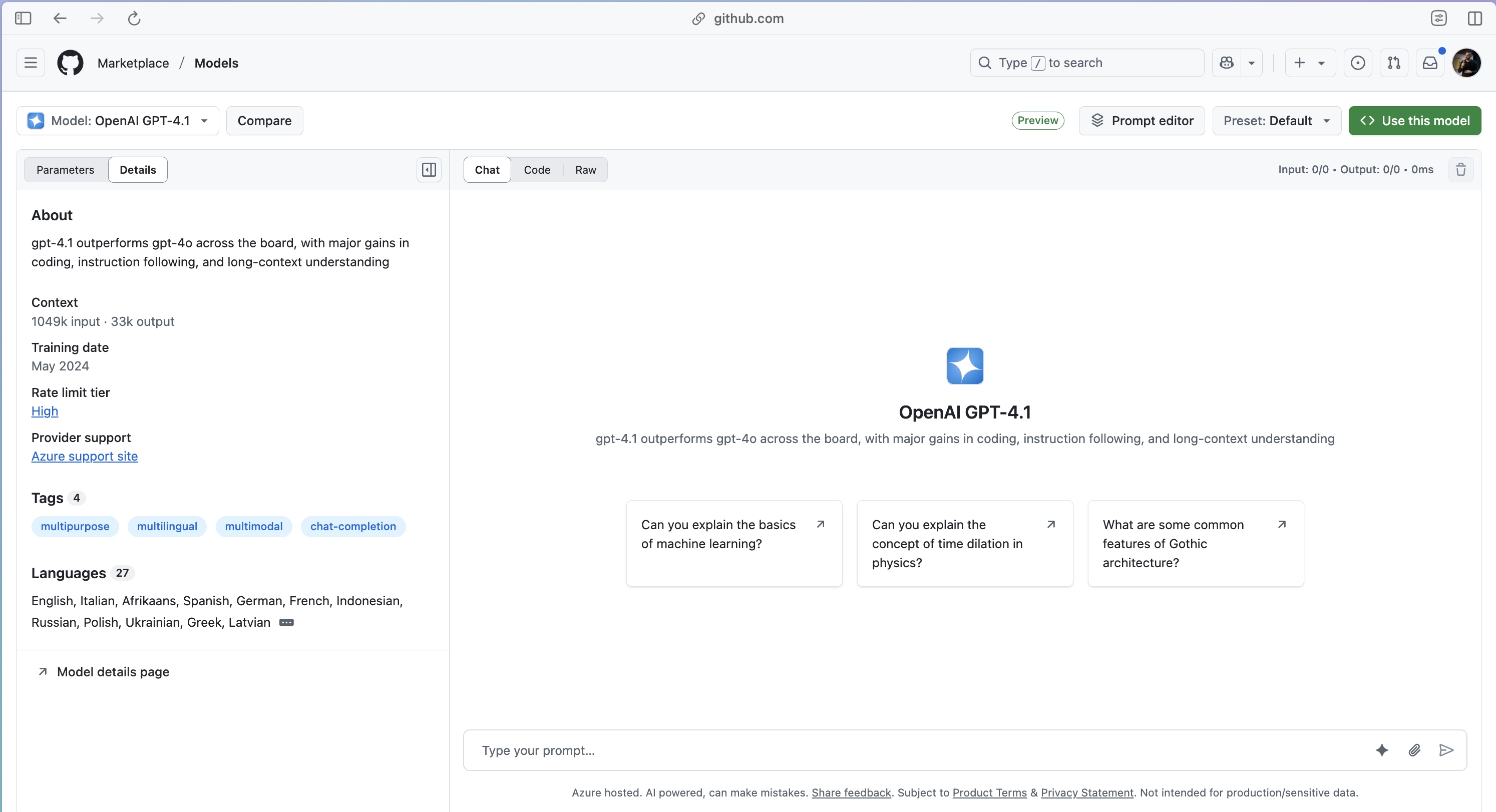

Playground

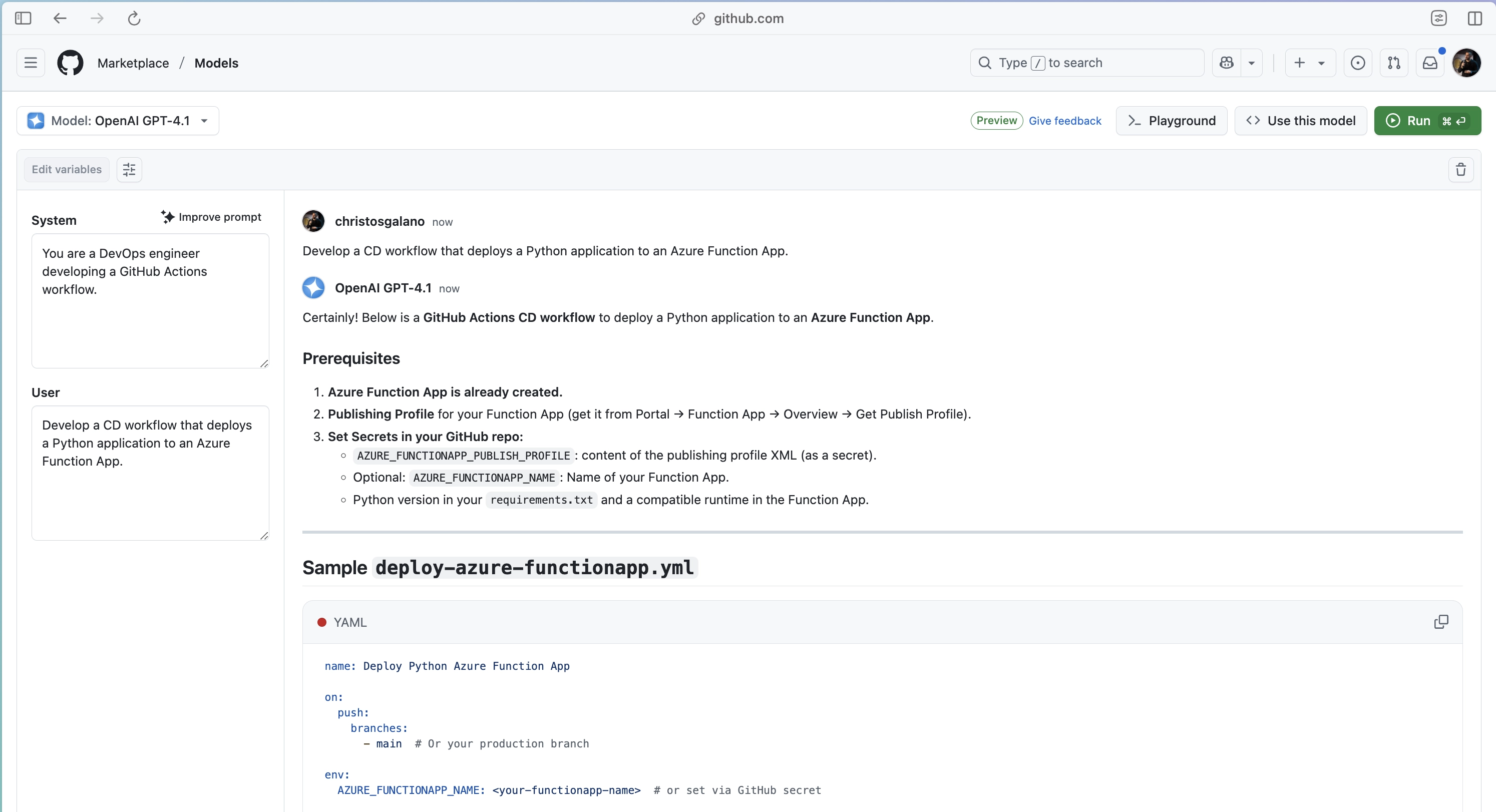

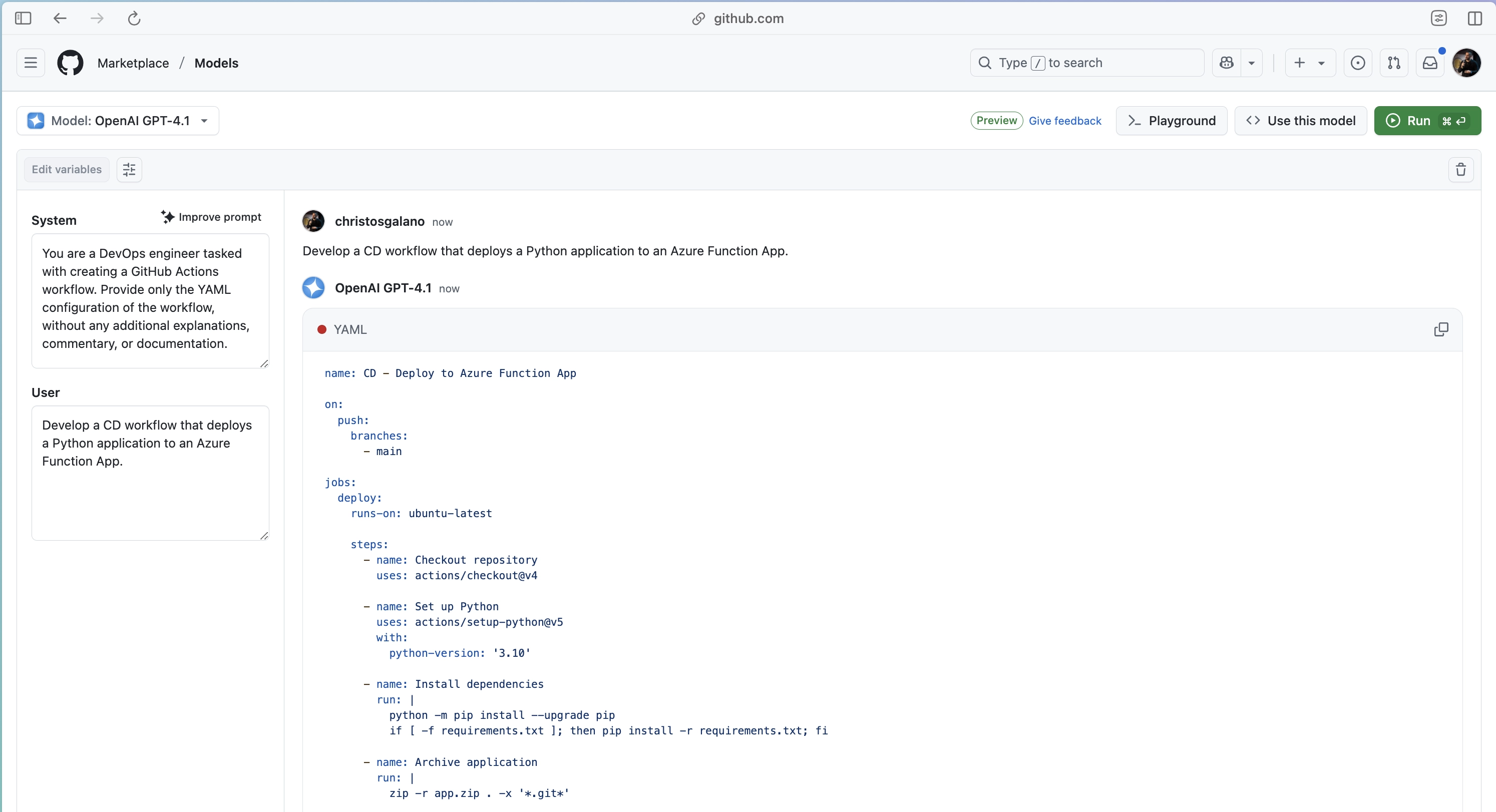

The Playground allows for prompt development and testing.

You can:

- Enter system and user prompts

- Adjust temperature, token limits, and other parameters

- View responses from the model in real time

- Compare outputs from multiple models

- Export test configurations as code

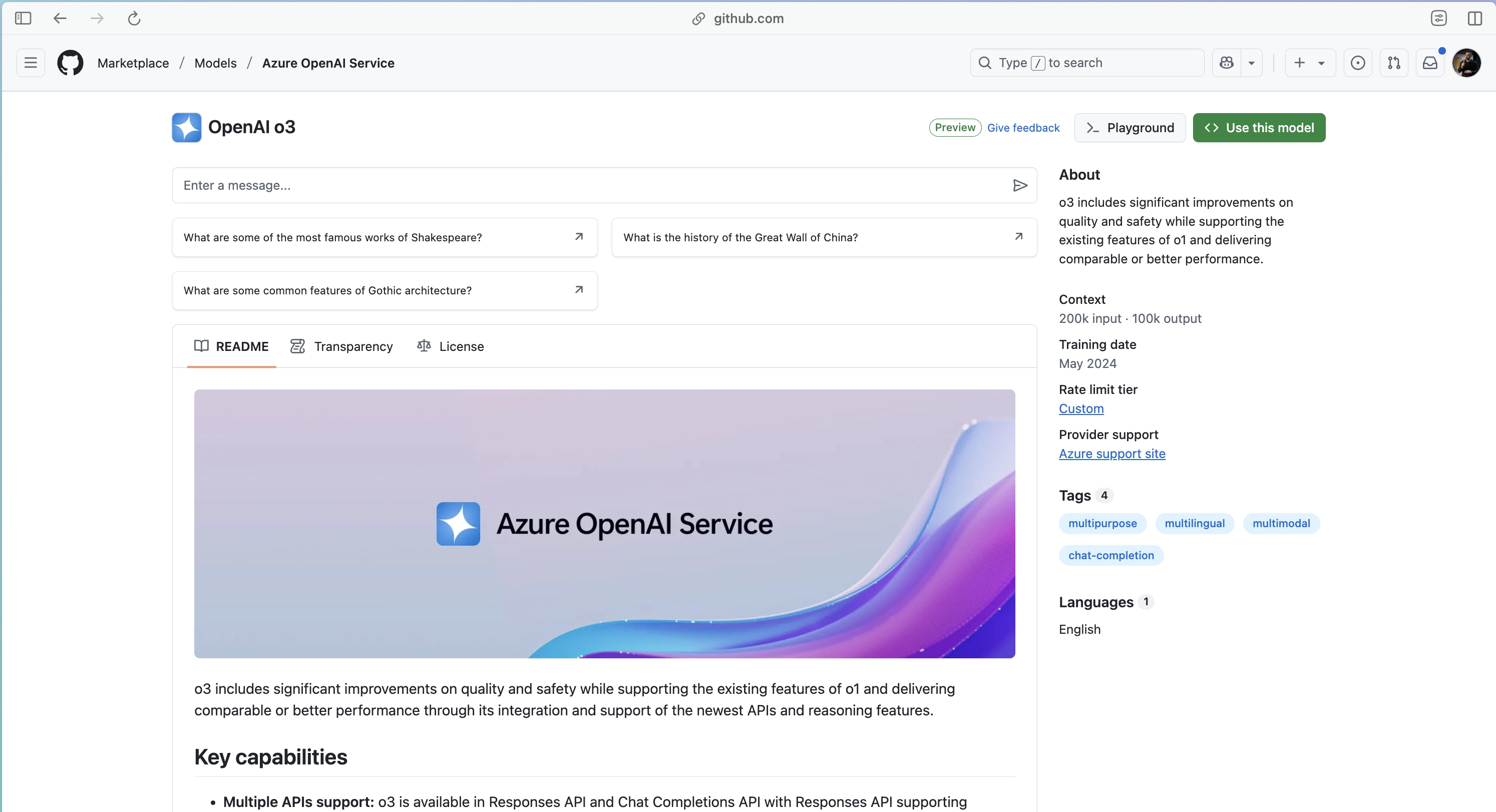

Model Overview

When you select a model, its details are shown, including context window, release date, and supported features.

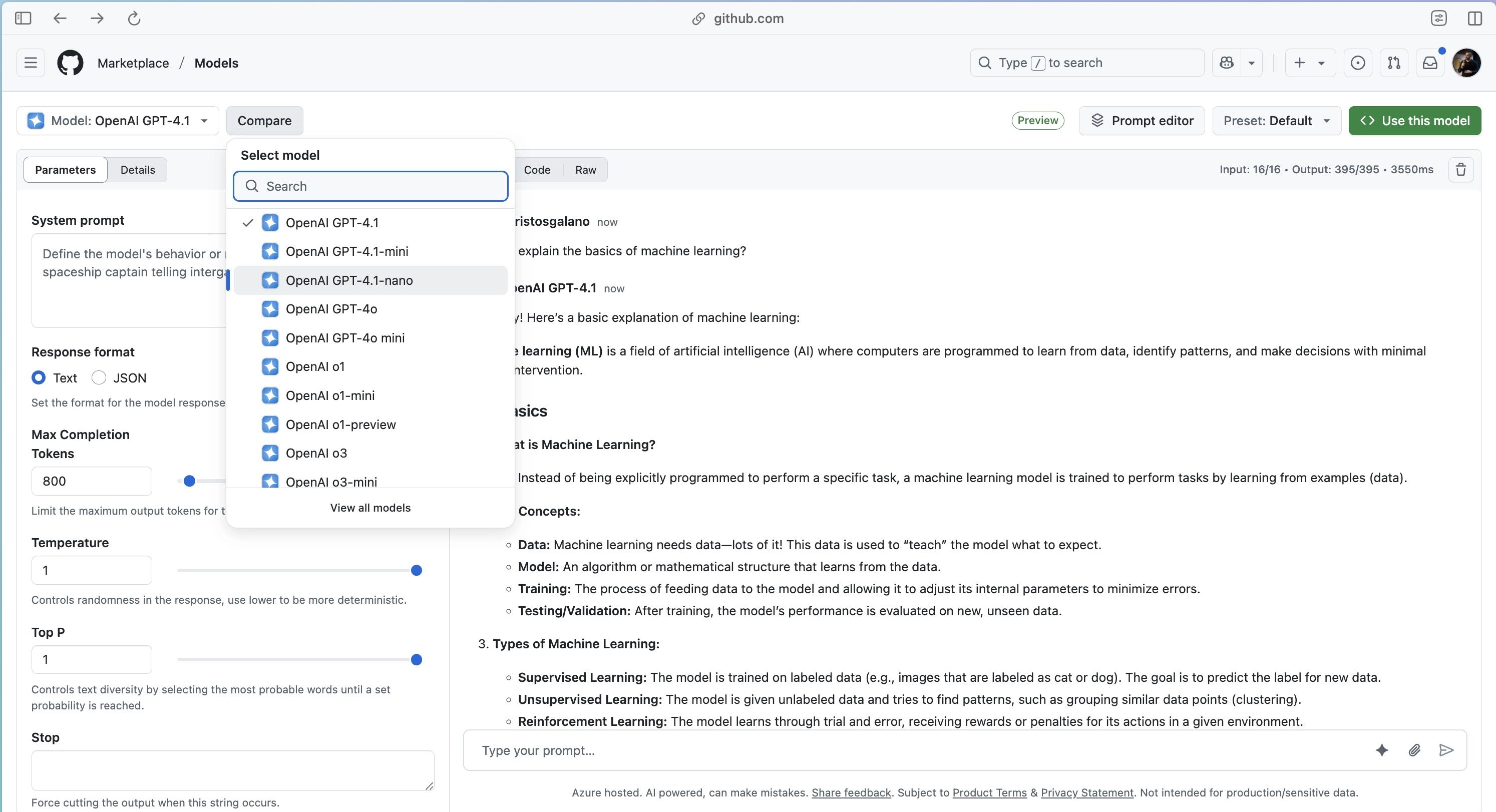

You can switch between:

- Parameters: modify model behavior

- Chat / Code / Raw: view response in different formats

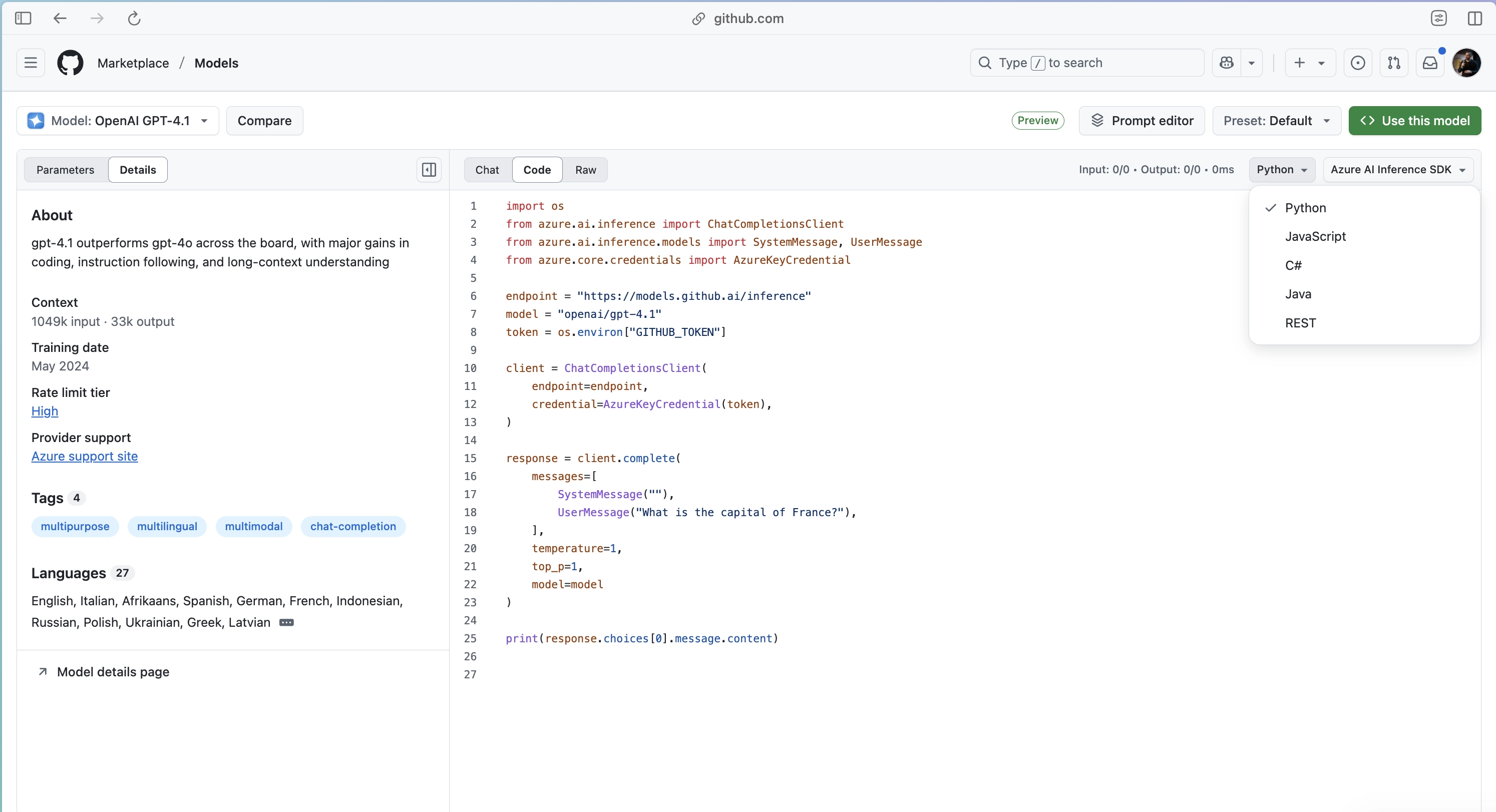

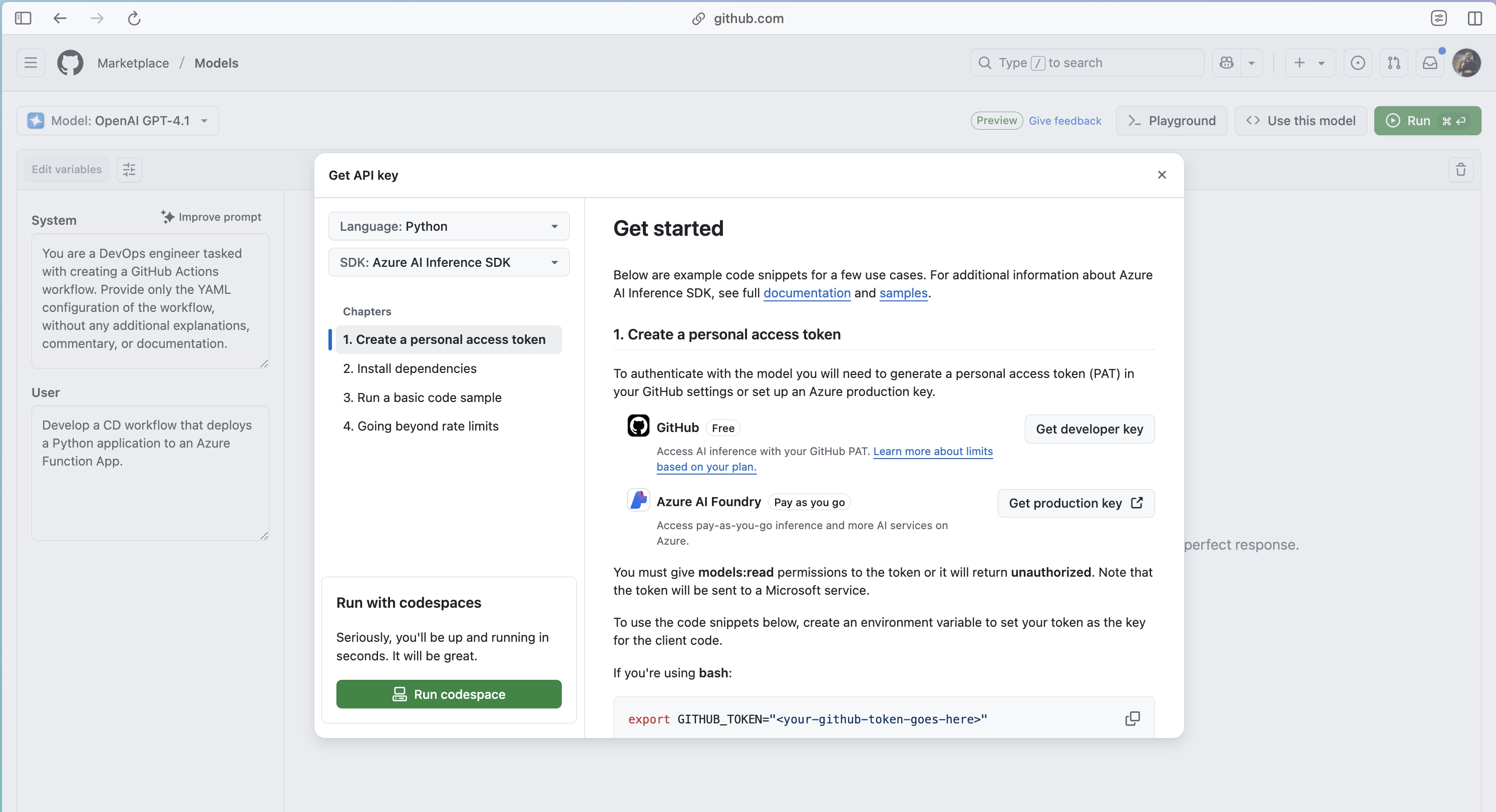

Code View

The Code tab shows how to call the model using SDKs or direct API requests.

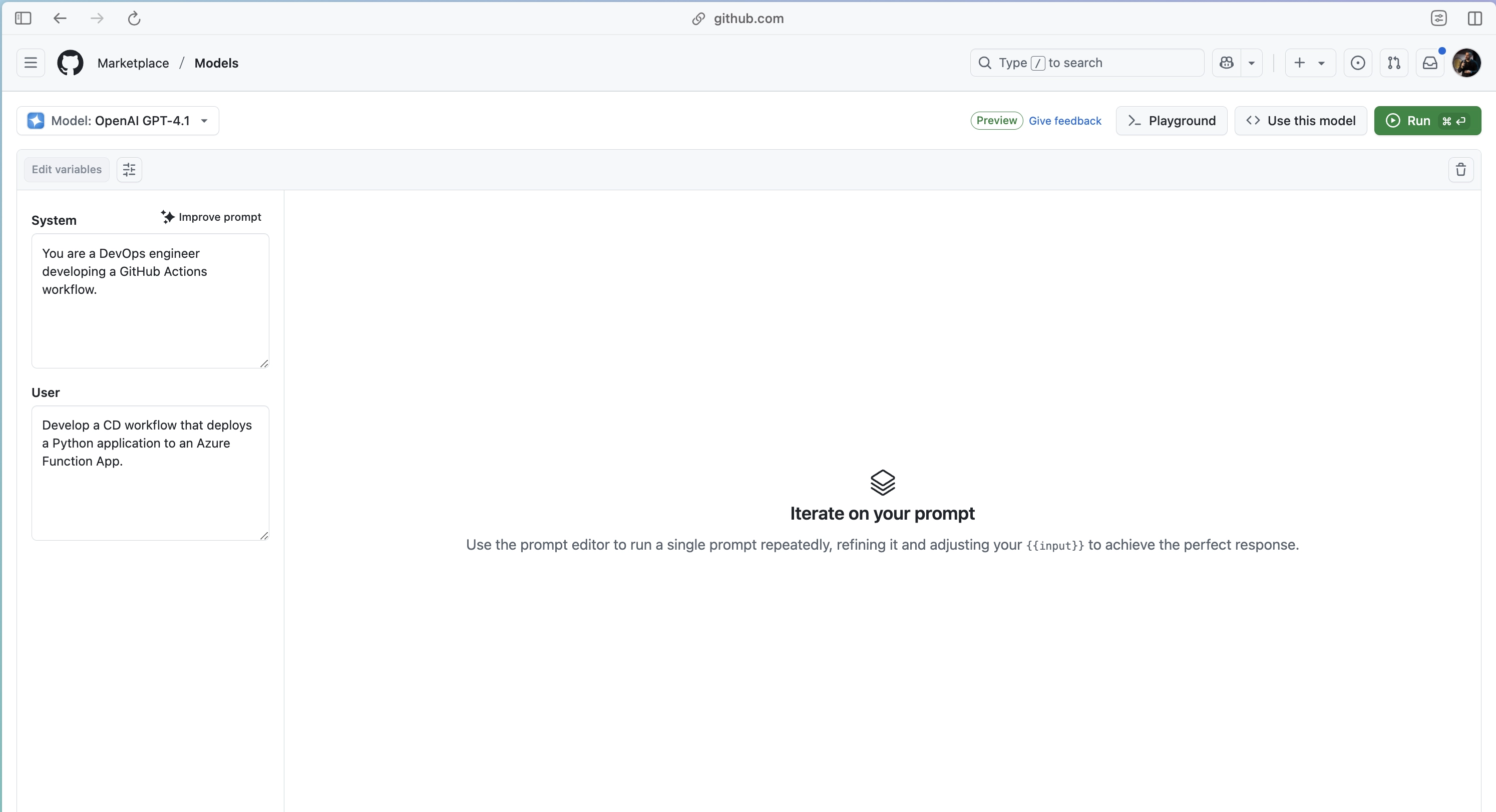

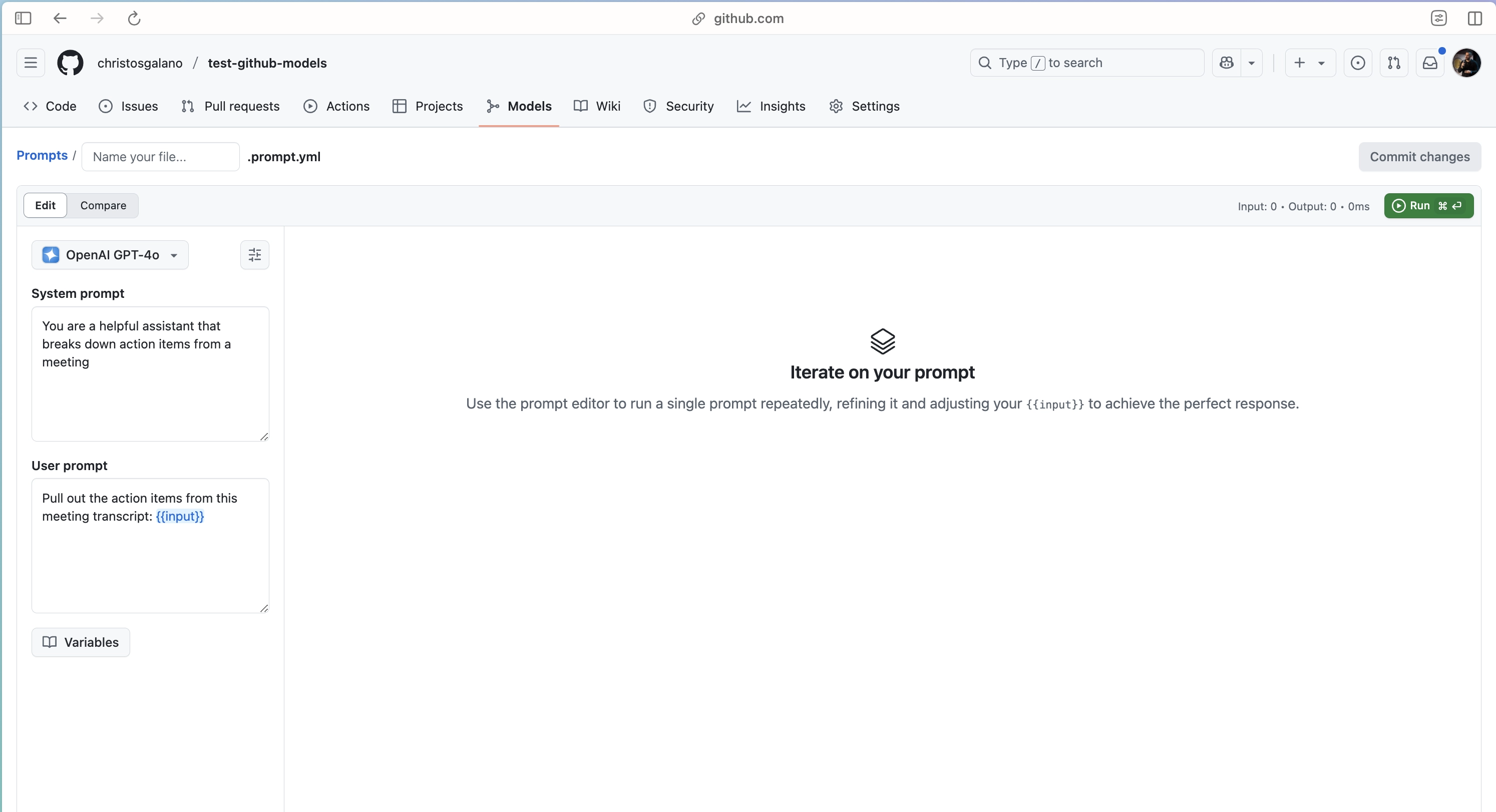

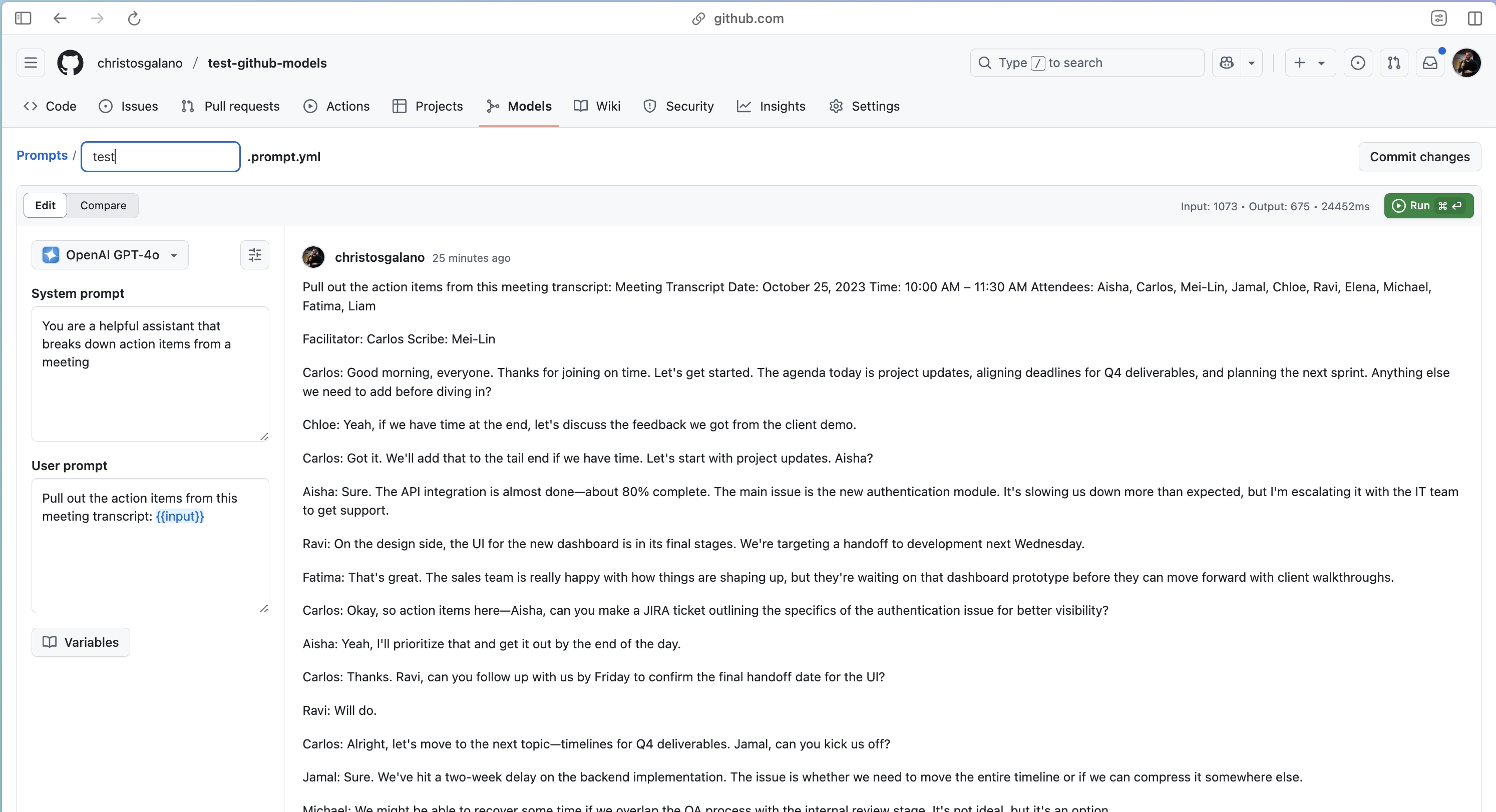
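As a rough illustration of what a direct API request can look like, the sketch below builds (but does not send) a chat completion request using only the Python standard library. The endpoint URL, the model ID, and the build_request helper are assumptions made for this example; copy the real values from the Code tab for the model you selected.

```python
import json
import urllib.request

# Assumption: endpoint and model ID are illustrative placeholders.
# Use the values shown in the Code tab for your chosen model.
ENDPOINT = "https://models.github.ai/inference/chat/completions"
MODEL = "openai/gpt-4o-mini"


def build_request(token, system_prompt, user_prompt, temperature=0.7, max_tokens=256):
    """Build (but do not send) an HTTP POST request for a chat completion."""
    payload = {
        "model": MODEL,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
        # The same knobs the Playground exposes under Parameters.
        "temperature": temperature,
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_request("YOUR_TOKEN", "You are a helpful assistant.", "Say hello.")
print(req.get_method(), req.full_url)
# To actually send it: urllib.request.urlopen(req), then parse the JSON body.
```

Keeping request construction separate from sending makes it easy to inspect the payload before spending any tokens.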

Prompt Editor

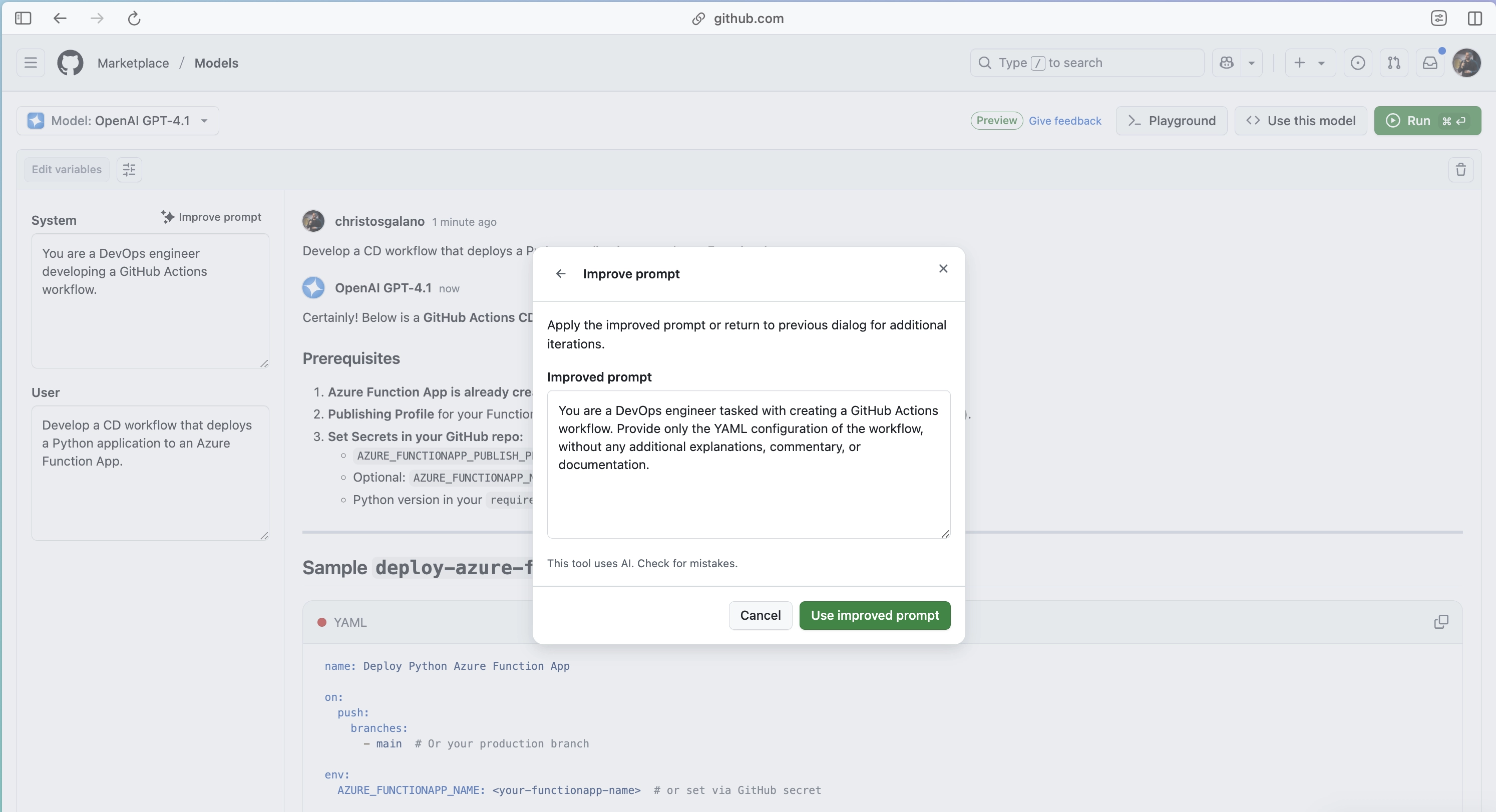

Use the Prompt Editor for single-turn prompts. This is useful when you’re testing short inputs or working with clear system instructions.

If needed, use Improve prompt to refine the prompt with suggestions generated by another model.

Run the prompt again to verify the improvements.

Model Comparison

The Compare function allows you to evaluate different models using the same prompt and input. This is useful for checking consistency, latency, or output quality.
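The same idea can be sketched outside the UI. The example below is a minimal illustration, not how the Compare function is implemented: it sends one prompt to several model callables and records each output with its latency. The names compare_models, model_a, and model_b are hypothetical, and the two stub functions stand in for real model calls.

```python
import time


def compare_models(models, prompt):
    """Run the same prompt through each model callable; record output and latency."""
    results = []
    for name, call in models.items():
        start = time.perf_counter()
        output = call(prompt)
        latency = time.perf_counter() - start
        results.append({"model": name, "output": output, "latency_s": latency})
    return results


# Hypothetical stand-ins for real model calls.
def model_a(prompt):
    return prompt.upper()


def model_b(prompt):
    return prompt[::-1]


for row in compare_models({"model-a": model_a, "model-b": model_b}, "hello"):
    print(f'{row["model"]}: {row["output"]!r} ({row["latency_s"]:.6f}s)')
```

Because every model receives the identical prompt, differences in the results come from the models themselves rather than from the input.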

Use this Model

Once a model is selected, click Use this model to begin using it in a configured prompt file.

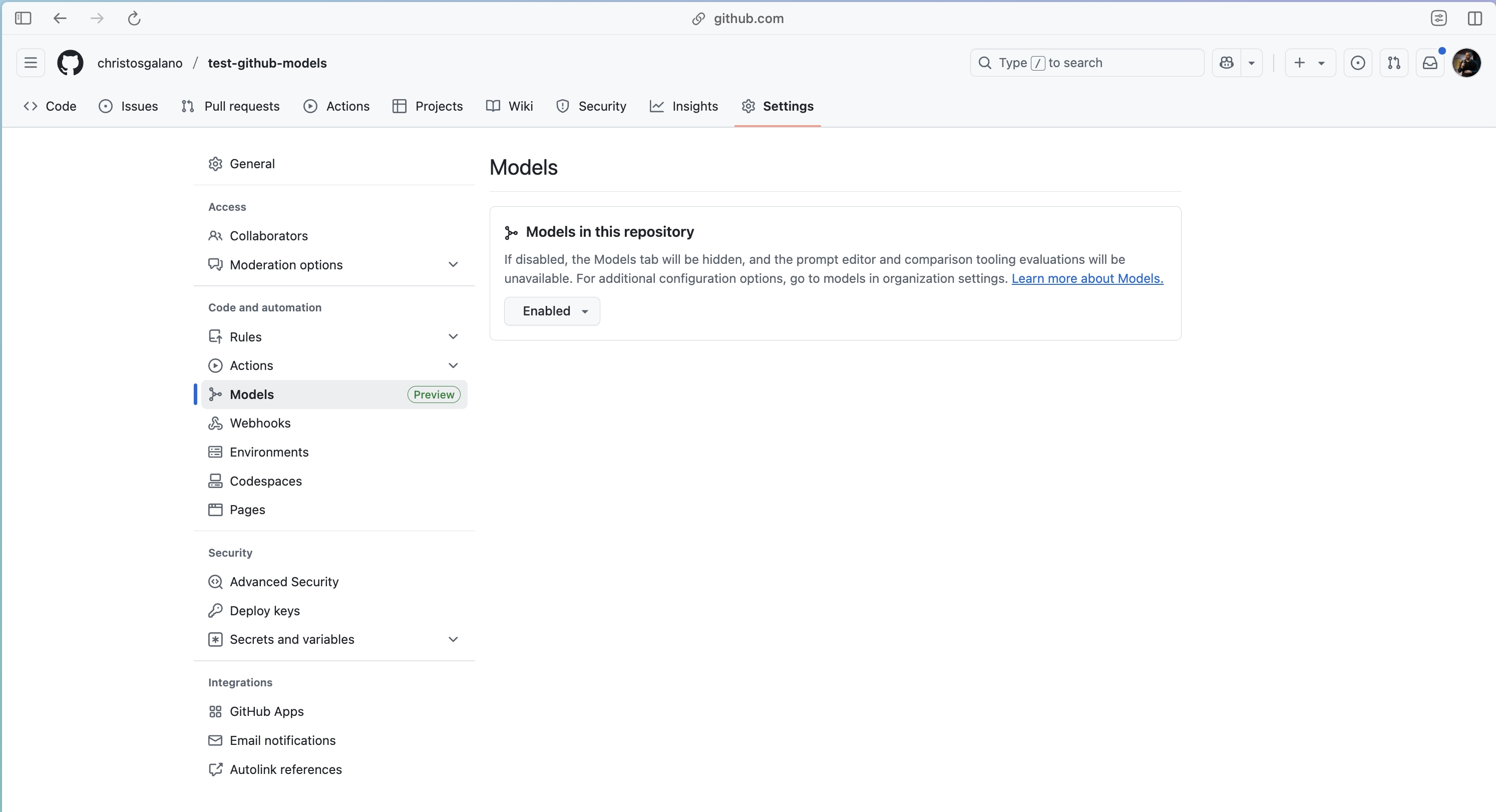

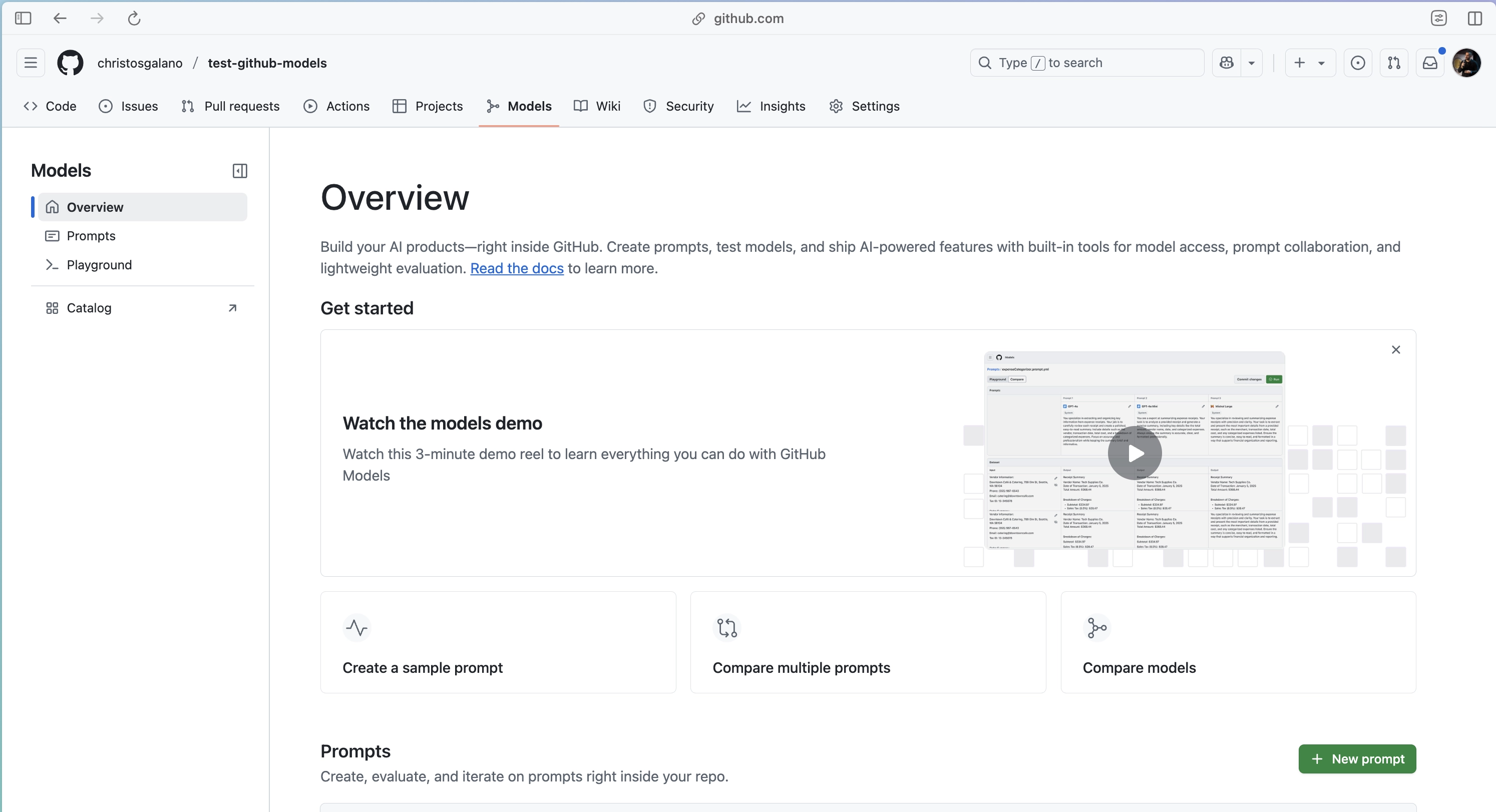

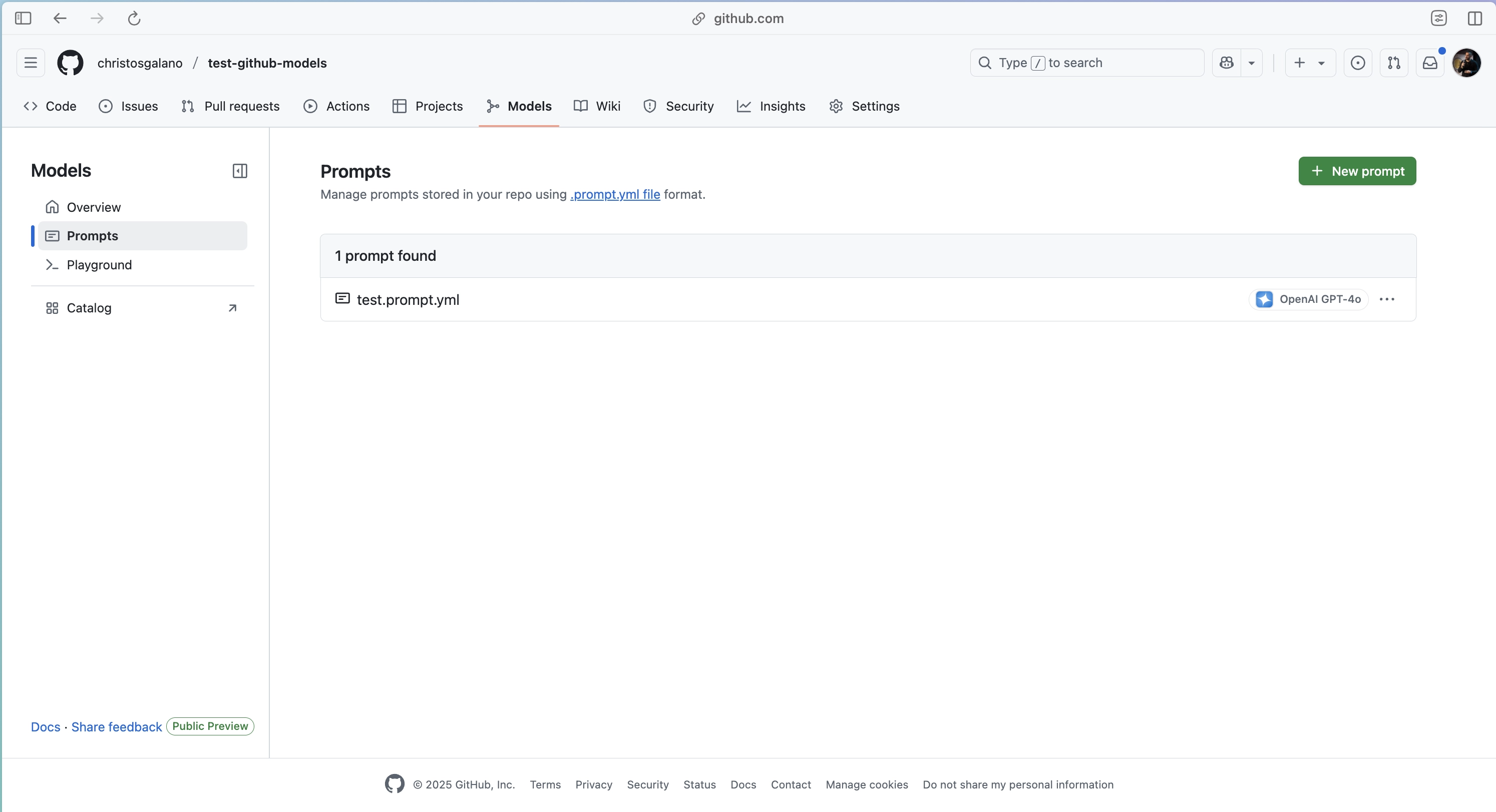

Repository Usage

GitHub Models can also be used inside your repository. Enable it in Settings → Models.

This adds a Models tab to the repo interface.

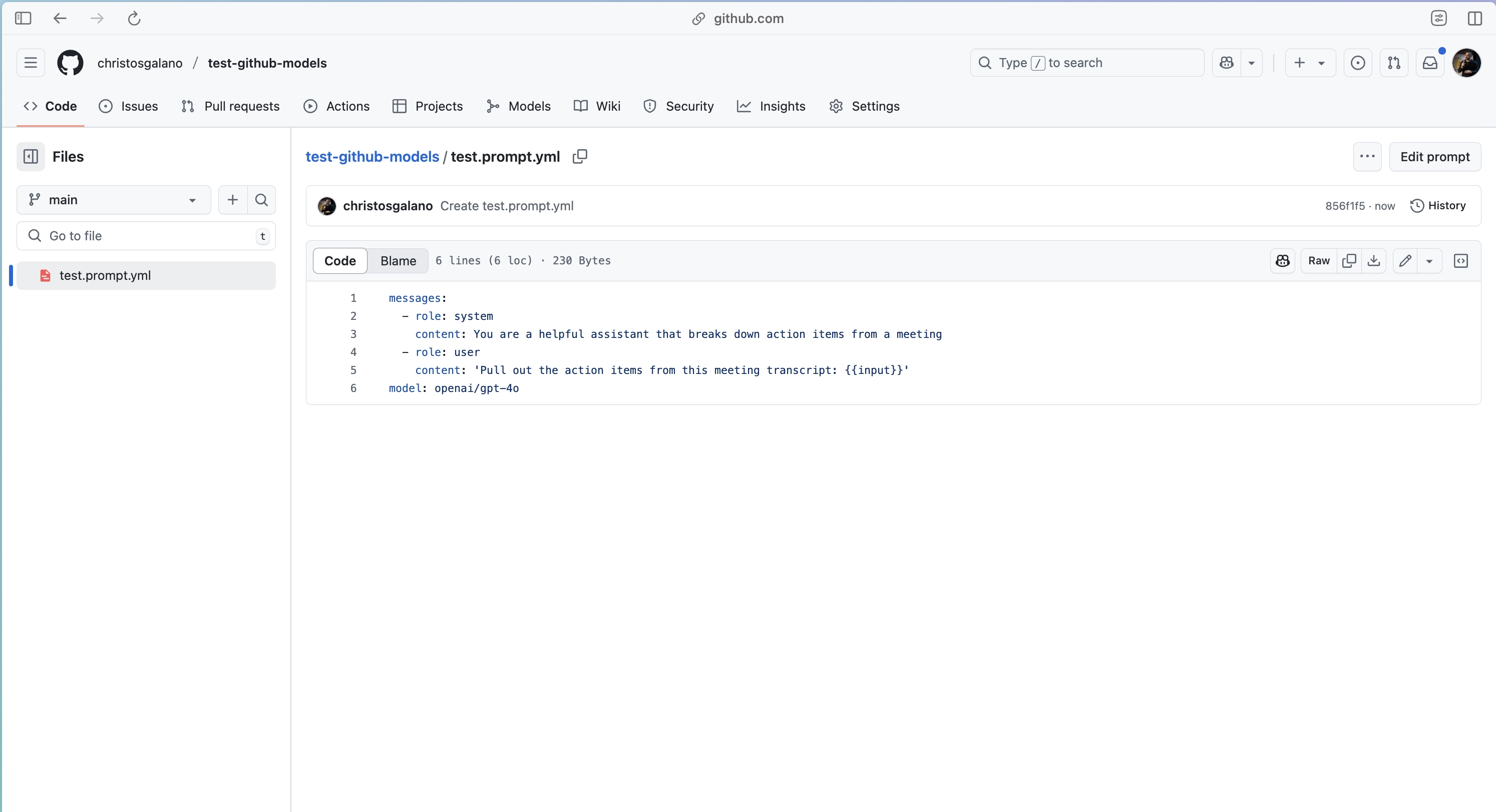

Create a Prompt

Use the UI to create a .prompt.yaml file. This file defines:

- A system prompt

- A user prompt (can include variables)
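To make the idea of a prompt with variables concrete, here is a small Python sketch of how a system and user prompt with a placeholder could be filled in before being sent to a model. The dictionary layout, the double-brace placeholder syntax, and the render_messages helper are assumptions for illustration only; check a generated .prompt.yaml file for the actual field names and syntax.

```python
import re

# Assumption: this structure and the {{name}} placeholder syntax are
# illustrative, not a definitive description of the file format.
prompt_config = {
    "messages": [
        {"role": "system", "content": "You summarize text in one sentence."},
        {"role": "user", "content": "Summarize this: {{input}}"},
    ]
}


def render_messages(config, variables):
    """Return a copy of the messages with {{name}} placeholders filled in."""

    def substitute(text):
        # Raises KeyError if a placeholder has no matching variable.
        return re.sub(
            r"\{\{\s*(\w+)\s*\}\}",
            lambda match: str(variables[match.group(1)]),
            text,
        )

    return [
        {"role": m["role"], "content": substitute(m["content"])}
        for m in config["messages"]
    ]


for message in render_messages(prompt_config, {"input": "GitHub Models overview"}):
    print(message["role"], "->", message["content"])
```

Keeping the template and the variable values separate is what lets the same prompt be rerun against many test inputs.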

Store & Share

Prompts are stored as version-controlled YAML files. They can be reviewed and updated via pull requests like any other code artifact.

Run & View Output

You can execute prompts directly from the Models tab and inspect the output inline.

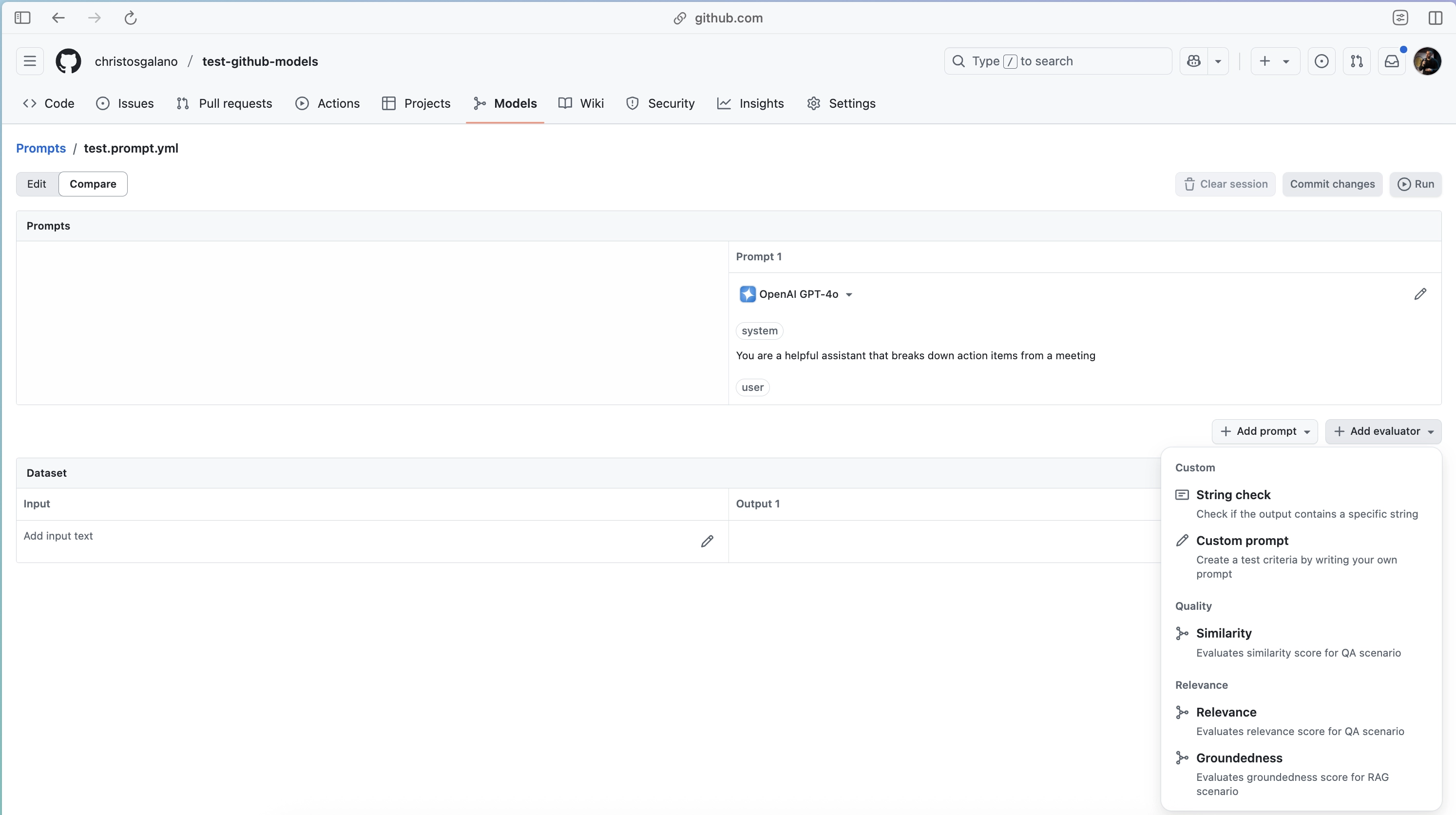

Evaluation

GitHub Models supports evaluation of model outputs using standard and custom metrics.

Available evaluators:

- Similarity: How close the result is to a reference output

- Relevance: Whether the output answers the input clearly and directly

- Groundedness: Whether the output stays true to the context

- Custom: User-defined checks for string presence or scoring logic
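For intuition, two of these checks can be approximated in a few lines of Python. This is a sketch under stated assumptions, not GitHub's implementation: contains_check mimics a custom string-presence check, and similarity_score uses character-level matching from the standard library's difflib as a simple stand-in for a similarity metric, which the built-in evaluator may compute quite differently. Both function names are hypothetical.

```python
from difflib import SequenceMatcher


def contains_check(output, required):
    """Custom-style check: pass if the required string appears (case-insensitive)."""
    return required.lower() in output.lower()


def similarity_score(output, reference):
    """Stand-in similarity: ratio of matching characters, from 0.0 to 1.0."""
    return SequenceMatcher(None, output, reference).ratio()


reference = "Summary - GitHub Models helps teams test prompts."
candidates = {
    "variant-1": "Summary - GitHub Models helps teams test prompts.",
    "variant-2": "It is a tool.",
}

for name, output in candidates.items():
    passed = contains_check(output, "summary -")
    score = similarity_score(output, reference)
    print(f"{name}: contains={passed} similarity={score:.2f}")
```

Scoring every variant against the same reference is what makes the results comparable from one variant to the next.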

Evaluations can be applied to multiple prompt variants or model outputs. Results appear side by side for comparison.
